Accidental Renaissance

2023-10-14

One interesting place to look for nice photographs is the Accidental Renaissance subreddit. It is a forum where photographs that resemble Renaissance art, or other art movements that existed between the 14th and 19th centuries, are shared.

Photo of part of a kitchen in renaissance style, generated using mage.space.

A related question is how to decide what counts as Renaissance (or Baroque, Romanticist, etc.) art, and therefore which photographs belong in the Accidental Renaissance subreddit. This is especially hard for people with no in-depth knowledge of art movements. Given the great results that deep learning based image classifiers can achieve, and how easy they are to implement using libraries such as fast.ai, I thought it would be a good idea to build a solution for this question. In this article I aim to build a model that, given a photograph, can decide whether it could belong in the Accidental Renaissance subreddit.

We are going to use two sources of data for this purpose. One is a collection of photos from the Accidental Renaissance subreddit, from which we can learn what makes for a good "Accidental Renaissance" photo. The other is a group of photos that are unlikely to be accidental renaissance. For this we will use an image dataset from Kaggle that contains images categorized into the following groups: Architecture, Arts and Culture, Food and Drinks, and Travel and Adventure.

With these two datasets we can start to create the classifier using fast.ai.

The first step is to import the required dependencies.

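# os and shutil are used further down for directory handling and file copying
import os
import shutil

import fastai
from fastai.vision.all import *
from random import sample
from ipywidgets import interact
import torch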

Next we define the paths for the images in the datasets.

all_path = "/mnt/c/data/accidental/"
renaissance_path = "/mnt/c/data/accidental/renaissance"
architecture_path = "/mnt/c/data/accidental/architecture"
art_and_culture_path = "/mnt/c/data/accidental/artandculture"
food_and_drinks_path = "/mnt/c/data/accidental/foodanddrinks"
travel_and_adventure_path = "/mnt/c/data/accidental/travelandadventure"

While fast.ai has a function to gather all the image files from a directory, the version used for this experiment did not gather image files with the .webp extension. As many image files from the Accidental Renaissance subreddit have a .webp extension, we will create a custom function to get all image files, including those of this type.

def get_all_image_files(path, recurse=True, folders=None):
    "Get image files in `path` recursively, only in `folders`, if specified."
    return get_files(path, extensions=[".webp", ".jpeg", ".gif", ".jpg"], recurse=recurse, folders=folders)
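To make the idea behind this helper concrete, here is a minimal standard-library sketch of the same kind of extension-based file gathering. It is not how fast.ai's `get_files` is implemented; the name `list_image_files` and the constant `IMAGE_EXTENSIONS` are hypothetical and only used for illustration.

```python
from pathlib import Path

# Extensions to accept; this mirrors the list passed to get_files above.
IMAGE_EXTENSIONS = {".webp", ".jpeg", ".gif", ".jpg"}


def list_image_files(root, extensions=IMAGE_EXTENSIONS):
    """Return all files under `root` whose suffix is in `extensions`.

    The suffix comparison is case-insensitive, so "photo.JPG" is included.
    This is a hypothetical helper, not part of fast.ai.
    """
    root = Path(root)
    return sorted(
        p for p in root.rglob("*")
        if p.is_file() and p.suffix.lower() in extensions
    )
```

Calling `list_image_files(renaissance_path)` would then return a sorted list of `Path` objects, which makes it easy to see which extensions are (and are not) being picked up.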

The files of each category can be retrieved using the above function and the relevant paths.

renaissance_files = get_all_image_files(renaissance_path)
architecture_files = get_all_image_files(architecture_path)
art_and_culture_files = get_all_image_files(art_and_culture_path)
food_and_drinks_files = get_all_image_files(food_and_drinks_path)
travel_and_adventure_files = get_all_image_files(travel_and_adventure_path)

Now that we have the list of files, it would be great to see how many of each type we have in the dataset.

{"Renaissance files": len(renaissance_files),
 "Architecture files": len(architecture_files),
 "Arts and Culture files": len(art_and_culture_files),
 "Food and Drinks files": len(food_and_drinks_files),
 "Travel and Adventure files": len(travel_and_adventure_files)
}

The above code will give us the following result:

{'Renaissance files': 135,
 'Architecture files': 8763,
 'Arts and Culture files': 8531,
 'Food and Drinks files': 7849,
 'Travel and Adventure files': 8800}

We have far more images in each category of the Kaggle dataset than we have from the Accidental Renaissance subreddit. There are many possible ways to deal with an unbalanced dataset, but here we go for a simple sampling based solution. We draw a random sample from the Kaggle dataset that is as large as the subreddit image set, and call it our "regular" image set.

sampled_regular_files = sample(architecture_files + art_and_culture_files + food_and_drinks_files + travel_and_adventure_files, 135)
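The sampling call above gives a different selection on every run. If a repeatable selection is wanted, one option is to draw from a seeded random generator. The sketch below shows this under stated assumptions: the function name `draw_regular_sample` and the `seed` parameter are hypothetical and not part of the original experiment.

```python
import random


def draw_regular_sample(groups, k, seed=0):
    """Pool several lists of files and draw `k` of them without replacement.

    Using a dedicated, seeded random.Random instance makes the draw
    repeatable without touching the global random state.
    """
    pool = [item for group in groups for item in group]
    if k > len(pool):
        raise ValueError(f"cannot draw {k} items from a pool of {len(pool)}")
    rng = random.Random(seed)
    return rng.sample(pool, k)
```

For this article the call would look like `draw_regular_sample([architecture_files, art_and_culture_files, food_and_drinks_files, travel_and_adventure_files], len(renaissance_files))`. Note that because the groups are pooled first, the four Kaggle categories are not guaranteed to be equally represented in the sample.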

Next we set up two directories, one for the renaissance files and one for the regular files. We make sure to create the directories learning/renaissance and learning/regular if they do not yet exist. If they already exist, the files within them are deleted (which makes rerunning the experiment easier).

def delete_files(directory):
    for filename in os.listdir(directory):
        file_path = os.path.join(directory, filename)
        if os.path.isfile(file_path):
            os.remove(file_path)

learning_dir = "learning"
learning_renaissance_dir = "learning/renaissance"
learning_regular_dir = "learning/regular"

if not os.path.exists(learning_renaissance_dir):
    os.makedirs(learning_renaissance_dir)
else:
    delete_files(learning_renaissance_dir)

if not os.path.exists(learning_regular_dir):
    os.makedirs(learning_regular_dir)
else:
    delete_files(learning_regular_dir)
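The create-or-empty pattern above can also be written more compactly. The following is a sketch of one alternative, assuming it is acceptable to remove the whole directory tree (including any subdirectories, which the code above leaves in place). The name `reset_directory` is hypothetical.

```python
import shutil
from pathlib import Path


def reset_directory(directory):
    """Make sure `directory` exists and is empty.

    Unlike delete_files above, this removes everything inside the
    directory, including nested subdirectories.
    """
    directory = Path(directory)
    if directory.exists():
        shutil.rmtree(directory)
    directory.mkdir(parents=True)
    return directory
```

With this helper the setup reduces to `reset_directory("learning/renaissance")` and `reset_directory("learning/regular")`.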

With the directories in place we can copy all the files into their respective directories as the final part of our dataset setup.

for file in sampled_regular_files:
    shutil.copy(file, learning_regular_dir)

for file in renaissance_files:
    shutil.copy(file, learning_renaissance_dir)
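One thing to watch out for when copying files from several source folders into a single directory is that two files with the same name overwrite each other, which would silently shrink the dataset. Whether that happens here depends on the file names in the Kaggle dataset, which I have not checked. A defensive sketch that avoids the issue is shown below; the function name `copy_with_unique_names` and the naming scheme are hypothetical.

```python
import shutil
from pathlib import Path


def copy_with_unique_names(files, destination):
    """Copy `files` into `destination`, giving every copy a unique name.

    Each target name is prefixed with the source folder name and a running
    index, so equal file names from different folders cannot collide.
    """
    destination = Path(destination)
    destination.mkdir(parents=True, exist_ok=True)
    copied = []
    for index, source in enumerate(files):
        source = Path(source)
        target = destination / f"{source.parent.name}_{index:05d}_{source.name}"
        shutil.copy(source, target)
        copied.append(target)
    return copied
```

Since the label is later derived from the name of the directory a file sits in, renaming the files themselves does not affect the labelling.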

We can double check things to see if everything went well. First we check whether the number of files in the directories adds up.

files = get_all_image_files(learning_dir)

len(files)

This should return 270. We can also check and remove any files that could not be read as image files using the following:

failed = verify_images(files)

failed.map(Path.unlink)

Next we are going to set up a DataBlock to get everything ready for training with fast.ai. The categories will be labelled renaissance and regular.

def label_function(o):
    parent_name = Path(o).parent.name
    if parent_name == "renaissance":
        return "renaissance"
    else:
        return "regular"

data_block = DataBlock(
    blocks=(ImageBlock, CategoryBlock),
    get_items=get_all_image_files,
    splitter=RandomSplitter(valid_pct=0.2, seed=4),
    get_y=label_function,
    item_tfms=[RandomResizedCrop(128, min_scale=0.3)]
)

dls = data_block.dataloaders(learning_dir)
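The splitter in the DataBlock holds out 20% of the images as a validation set. To illustrate what that means for our 270 images, here is a small standard-library sketch of a seeded random split. It is not fast.ai's implementation, and the shuffle order will differ from what `RandomSplitter` produces. The function name `random_split` is hypothetical, and the sketch assumes the validation size is obtained by truncating `valid_pct * n_items` to an integer.

```python
import random


def random_split(n_items, valid_pct=0.2, seed=None):
    """Return (train_indices, valid_indices) for a shuffled range of n_items."""
    rng = random.Random(seed)
    indices = list(range(n_items))
    rng.shuffle(indices)
    # The first `cut` shuffled indices form the validation set.
    cut = int(valid_pct * n_items)
    return indices[cut:], indices[:cut]


train_idx, valid_idx = random_split(270, valid_pct=0.2, seed=4)
print(len(train_idx), len(valid_idx))  # prints: 216 54
```

So with 270 images we would expect 216 training images and 54 validation images, which is a rather small validation set.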

We can check our datablock setup by showing a batch of images and their labels from it.

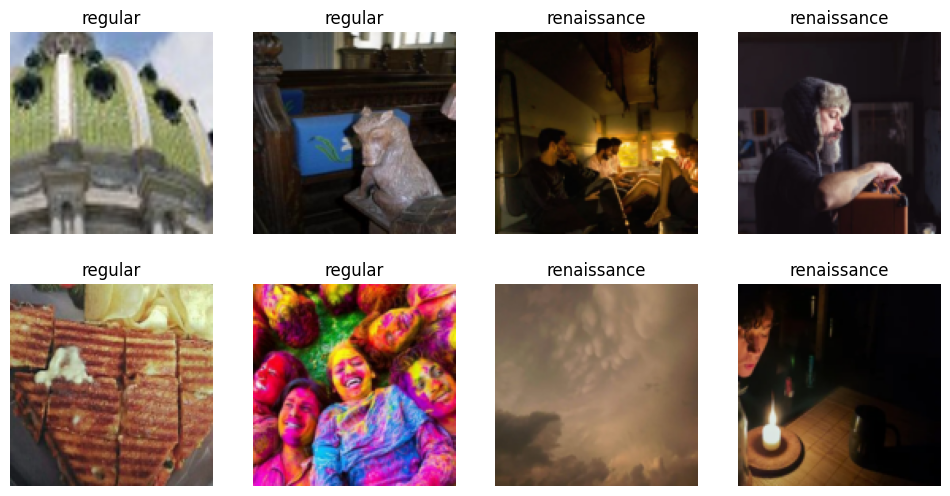

dls.show_batch(max_n=8)

A batch of the datablock showing examples of the image dataset with their labels.

The above code will return a batch such as this, if all went well.

Now that we have the data loaders set up we can train the model. We are going to use a pretrained resnet34 model as a base and fine tune it for 5 epochs.

learn = vision_learner(dls, resnet34, metrics=error_rate)

learn.fine_tune(5)

After 5 epochs, this particular model reached a loss of 0.486287 on the training set, a loss of 0.595300 on the validation set and an error rate of 0.203704 on the validation set.
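To put the error rate in perspective, we can work out how many validation images it corresponds to. The sketch below assumes that all 270 images survived the verification step and that 20% of them, i.e. 54 images, ended up in the validation set.

```python
total_images = 270          # 135 renaissance + 135 regular (assumes none were removed)
valid_pct = 0.2             # same value as passed to RandomSplitter
reported_error_rate = 0.203704

valid_count = int(valid_pct * total_images)
misclassified = round(reported_error_rate * valid_count)

print(valid_count, misclassified, round(misclassified / valid_count, 6))
# prints: 54 11 0.203704
```

Under these assumptions the model misclassified 11 of the 54 validation images. With a validation set this small, a single image changes the error rate by almost two percentage points, so the number should be read with some caution.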

So how does the model actually do in practice? One interesting way to evaluate it is to generate a few photos in a renaissance art style with one of the AI image generation tools now available. I generated a few photos using mage.space, aiming for modern objects in a renaissance style, similar to what might be photographed and posted in the Accidental Renaissance subreddit.

For a generated photo of a kitchen in renaissance style the model predicted the right category (i.e. "renaissance"), with a probability of 0.7438.

A photo of a kitchen in renaissance style generated on mage.space.

A generated photo of a car in a renaissance style got the right category predicted with a probability of 0.8643.

A photo of a car in renaissance style generated on mage.space.
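For readers wondering how the label and the probability relate: in fast.ai, `learn.predict` returns the predicted label together with one probability per class, and the reported probability is the one belonging to the predicted class. The sketch below illustrates this with plain Python. The helper `describe_prediction` is hypothetical, the class order is an assumption (alphabetical), and the "regular" probability is not taken from the experiment but derived as the complement of the reported 0.7438.

```python
def describe_prediction(vocab, probabilities):
    """Return the most likely label and its probability."""
    best = max(range(len(vocab)), key=lambda i: probabilities[i])
    return vocab[best], probabilities[best]


# Assumed class order; with two classes the probabilities sum to 1.
vocab = ["regular", "renaissance"]
kitchen_probabilities = [1 - 0.7438, 0.7438]

label, probability = describe_prediction(vocab, kitchen_probabilities)
print(label, probability)
```

A probability of 0.7438 for "renaissance" therefore means the model assigned the remaining 0.2562 to "regular", so the kitchen prediction is correct but not overwhelmingly confident.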

Interestingly enough, however, the trained model has trouble recognizing actual renaissance (or baroque) paintings. The famous Last Supper by Leonardo da Vinci was classified as "regular", as was Judith Beheading Holofernes by Caravaggio.

It would be interesting to hypothesize and investigate why this could be the case. Although they might be similar in art style, the paintings on the one hand and the subreddit photos and generated photos on the other might differ in other aspects, such as the subject matter. The learning process likely needs additional data, as 135 photos might be too few for a well fine tuned model. We could, for example, use existing renaissance or baroque art to bolster the dataset. We could also investigate data augmentation to improve the training set.