AI at Home

2023-12-16

Large Language Models (LLMs), such as those that power ChatGPT, have proven highly capable at language understanding and generation tasks. With the right prompt, they can answer questions, categorize input, rewrite pieces of text, perform sentiment analysis, and more.

As good as these tools are, many of them require data to be sent to a remote server and/or incur additional costs to run. This is due to the processing power required to use them and the proprietary nature of the models. However, there are models that can be run locally, even on relatively modest hardware, such as some of the LLaMA models from Meta. Such locally runnable models make it possible to run a modern AI setup entirely at home, without sending data to another party. This article is a brief introduction to getting one of these models up and running.

A generated picture of an AI helping at home.

The easiest solution that I have found for this purpose is the text-generation-webui tool. As the name suggests, it makes language models usable through a web-based UI, and it can do so while running only on the local machine. The setup is very straightforward: clone or download the repository, run the start script for your operating system (e.g. start_wsl.bat for running on WSL), and you are pretty much ready to go.

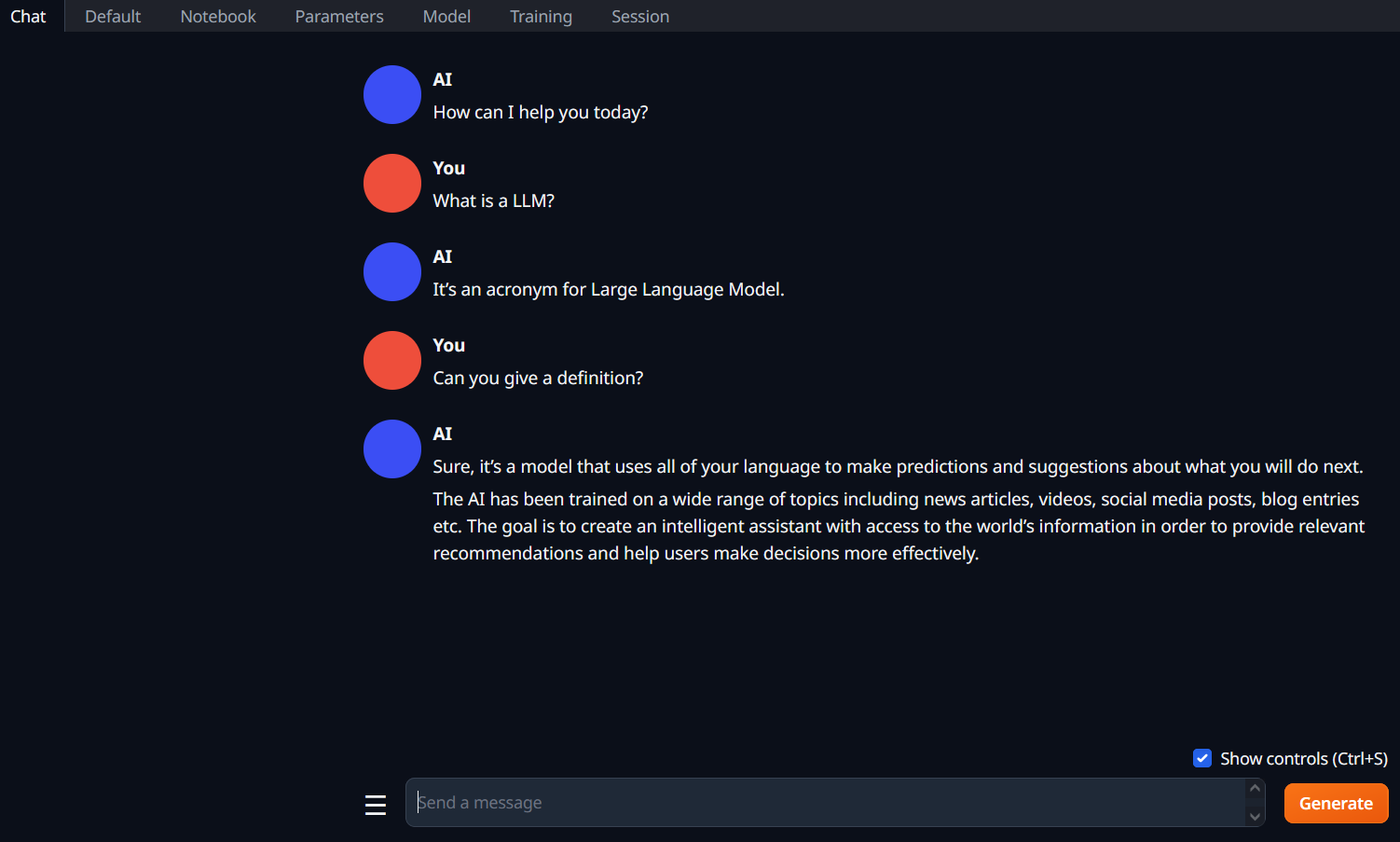

This tool can use various models, but a nice one to get started with is the Llama-2-7B-GGUF model provided by TheBloke, which is a 7-billion-parameter Llama 2 model. After loading it, we can simply start chatting.

A short conversation with the AI model.

Although this model is on the smaller side, it functions well for simple queries and conversations, and it runs adequately on a 2021 Asus G14 laptop.

There are many possibilities to explore with such a local setup, especially with larger, more capable models. It also provides an easy way to prototype, because the tool offers an API that acts as a local drop-in replacement for the OpenAI API, so you can interact with the model from your own code.
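To give an idea of what talking to such a local API could look like, here is a minimal sketch in Python using only the standard library. It assumes the web UI was started with its OpenAI-compatible API enabled and that it listens on http://127.0.0.1:5000/v1; the address, the helper names build_chat_payload and chat, and the default system message are my own assumptions for illustration, so check the project's documentation for the settings of your installation.

```python
import json
import urllib.request

# Assumption: the local server exposes an OpenAI-style API at this address.
# Adjust host and port to match your own setup.
BASE_URL = "http://127.0.0.1:5000/v1"


def build_chat_payload(user_message,
                       system_message="You are a helpful assistant.",
                       max_tokens=200):
    """Build a request body in the OpenAI chat completions format."""
    return {
        "messages": [
            {"role": "system", "content": system_message},
            {"role": "user", "content": user_message},
        ],
        "max_tokens": max_tokens,
        "temperature": 0.7,
    }


def chat(user_message, base_url=BASE_URL, timeout=120):
    """Send one message to the local server and return the reply text.

    Hypothetical helper: it requires the local server to be running.
    """
    payload = build_chat_payload(user_message)
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # OpenAI-style responses put the generated text in the first choice.
    return body["choices"][0]["message"]["content"]
```

With the server running, calling chat("Is this review positive or negative? 'I loved it.'") should return the model's answer as a string. Because the request format mirrors the OpenAI one, code written against this local endpoint should need little more than a different base URL to run against the hosted service, and vice versa.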

I hope this article helps you get started exploring your AI use cases locally!