Exploring the Deep, Part 1: Introduction

2019-02-04

Deep Learning is a field within Artificial Intelligence (AI) that has received a lot of attention lately, thanks to some truly impressive results in recent years. From recognizing objects in images with accuracy that rivals humans, to generating realistic-looking text, to beating professional players in Starcraft 2, some groundbreaking applications have been built with Deep Learning techniques.

As someone whose AI background lies mostly in Symbolic Artificial Intelligence, but who is eager to learn, I find that now is as good a time as any to explore this field. Thankfully, there are some excellent books and tutorials out there to get a nice start.

One book that has caught my attention is Dive into Deep Learning, which seems to offer a nice overview of the field while being very practical at the same time. I aim to work through the book and share my notes, summaries and experiences on this journey in a series of articles. My goal is to write these articles for a somewhat broader audience than the book itself. I hope that even readers with less exposure to the field than me can follow along, and if a topic piques their interest, they can use the book as a more detailed reference.

The first chapter that I aim to go over is the introduction, both to the book and to Machine Learning in general. As Deep Learning (DL) is a form of Machine Learning (ML), an intro or refresher on the topic of Machine Learning is a natural start.

What is Machine Learning?

In most scenarios, programs are written in a way where the programmer gives precise information about the problem and/or how to solve it. For example, in imperative programming the program is essentially a list of statements that command the computer what to do. Even in the many forms of declarative programming, such as functional or logic programming, the solution is still provided through explicit descriptions by the coder, who describes the entirety of the problem with mathematical functions or with logical axioms, respectively.

However, there are scenarios where such paradigms are not adequate. Take, for example, a case where we have a set of photos of cats and dogs. Suppose we need to write a program that, given a photo, can recognize the species and breed of the animal depicted. Generally, it is difficult to even begin formulating such a problem in terms of the usual programming paradigms. Any handwritten solution we come up with will usually be very brittle: it will often fail to recognize the right kind of pet in the photo, or it will only be correct for a very limited set of photos.

An alternative way to solve this problem is to let the computer learn what kind of pet is in the photo. The computer can use a set of labelled examples, where the species and breed of the animal are already known and given alongside each photo. In such cases we start with an initial, perhaps even random, model that can make this prediction. Such a model will likely be pretty bad at the start; otherwise the problem would already be solved. Then we use the labelled data to update this model, in the hope that the new model will be better at classifying animals. We keep adding data and improving the model until we decide that it is good enough to solve our pet classification problem.

This process, in which a machine learns from previous experience to solve a problem, is called machine learning.

The recognition of dogs and cats, along with their breeds, is actually an interesting research problem in machine learning, because the subtle differences between breeds make the problem difficult for computers. These images are from the Oxford-IIIT Pet Dataset, which can be used to help evaluate techniques aiming to tackle this problem. The images of this dataset are licensed under the Creative Commons Attribution-ShareAlike 4.0 International License. Copyright of the images remains with their original owners.

There are many forms of machine learning, of which deep learning is only one particular branch, but the various techniques share some common elements.

Elements of Machine Learning

Data

Data is the set of examples from which the machine aims to learn. In the previous scenario, the data is the labelled photos of pets.

Model

The model denotes the whole process that takes the input data and transforms it into the output we are after. In our example, the model would be the full process that can take a given photo and transform it into a prediction of the pet depicted.

Objective Function

The objective function measures how good, or bad, our model currently is. As mentioned before, the reason we perform machine learning is to let the computer learn how to solve a problem. The objective function allows us to measure the quality of our current solution, so we can assess whether the learning is progressing towards an acceptable solution.

Optimization Algorithm

The optimization algorithm describes the process of moving from one model to a better one. This is what allows the learning to happen: step by step, the current model is replaced by one that better solves the problem.
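To make the four elements a bit more concrete, here is a minimal sketch in plain Python. It assumes a toy regression task rather than pet photos: the data are hypothetical (x, y) pairs, the model is a straight line `w * x + b`, the objective function is the mean squared error, and the optimization algorithm is plain gradient descent. None of these choices come from the book; they are simply about the smallest setup in which all four elements show up.

```python
import random

# Data: hypothetical labelled examples (input x, target y), roughly following y = 2x + 1
data = [(0.0, 1.0), (1.0, 3.1), (2.0, 4.9), (3.0, 7.2), (4.0, 8.8)]

# Model: a line with a weight w and a bias b
def predict(w, b, x):
    return w * x + b

# Objective function: mean squared error over the data (lower is better)
def mse(w, b, data):
    return sum((predict(w, b, x) - y) ** 2 for x, y in data) / len(data)

# Optimization algorithm: one step of gradient descent on the mean squared error
def gradient_step(w, b, data, learning_rate=0.02):
    n = len(data)
    grad_w = sum(2 * (predict(w, b, x) - y) * x for x, y in data) / n
    grad_b = sum(2 * (predict(w, b, x) - y) for x, y in data) / n
    return w - learning_rate * grad_w, b - learning_rate * grad_b

def train(data, steps=2000, seed=0):
    rng = random.Random(seed)
    # Start from a random (and most likely bad) model
    w, b = rng.uniform(-1, 1), rng.uniform(-1, 1)
    for _ in range(steps):
        w, b = gradient_step(w, b, data)
    return w, b

w, b = train(data)
print(f"w={w:.2f}, b={b:.2f}, loss={mse(w, b, data):.3f}")
```

After training, the parameters should end up very close to the least-squares line for this data (w ≈ 1.97, b ≈ 1.06). In deep learning the model is a neural network with far more parameters, and frameworks such as MXNet compute the gradients automatically, but the division of labour between data, model, objective and optimizer stays the same.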

Kinds of Machine Learning

Machine learning is a large field with a wide variety of techniques and potential problems to solve. Generally, techniques are differentiated by the kind of data they take as input and produce as output, as well as by the specific type of problem they target.

Supervised Learning

Supervised learning aims to predict targets given some input data. A good example of this is our previous scenario where we were trying to predict what type of pet is in a photo. If we have a number of photos for which we know what kind of animal is depicted in them (i.e. labeled data), we could perform such supervised learning.

Supervised learning can be further subdivided based on the kind of target we aim to predict. Some common types of supervised learning are:

Classification

The above example is actually called a classification scenario. In general, classification problems are those where, given an example, we aim to find the particular class to which that example belongs. While in the example we described the classes as the species and breeds of pets, many other types of classes can be used depending on the problem we aim to solve.

Tagging

Consider a scenario where there could be more than one pet in the photo and we want to recognize each of them. Predicting in such cases is called tagging. The main difference between classification and tagging is that with tagging, multiple classes need to be recognized at once (e.g. the photo can contain both a dog and a cat). This is in contrast with classification, where the classes are mutually exclusive (e.g. a dog or a cat).
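One common way to see the difference in code is sketched below, under the assumption that a model has already produced one raw score per class. For classification, the scores are turned into a single probability distribution with a softmax and exactly one class is picked; for tagging, each class gets its own independent probability through a sigmoid and every class above a threshold is kept. The class names, the scores and the 0.5 threshold are made up for illustration.

```python
import math

CLASSES = ["cat", "dog", "rabbit"]

def softmax(scores):
    # Subtract the max for numerical stability; the resulting probabilities sum to 1
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))

def classify(scores):
    # Classification: classes are mutually exclusive, so pick the single most likely one
    probs = softmax(scores)
    best = max(range(len(probs)), key=lambda i: probs[i])
    return CLASSES[best]

def tag(scores, threshold=0.5):
    # Tagging: each class is an independent yes/no decision, so several can be present
    return [c for c, s in zip(CLASSES, scores) if sigmoid(s) >= threshold]

scores = [2.0, 1.5, -3.0]  # hypothetical raw scores for a photo showing a cat and a dog
print(classify(scores))  # prints: cat
print(tag(scores))       # prints: ['cat', 'dog']
```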

Regression

The case where we want to predict a (real-valued) number, for example the weight of the pet depicted in the photo, is called regression.

Search and Ranking

Search and ranking is a scenario where we want to figure out an order between various items. For example, suppose that we want to rank various breeds of dogs and cats by popularity.

Unsupervised Learning

In cases with supervised learning, a set of examples for which the result is already known must be available. Often large quantities of such information are required to get accurate results. However, such information can be scarce, or simply unavailable.

Even in such cases, machine learning can still extract a wealth of information from the data. Some example techniques include:

Clustering

Even in the absence of clearly labelled categories, we may still want to group the data into categories. This process is called clustering. For example, suppose we have a set of photos depicting all sorts of pets, without any labels. Here we could still aim to group similar pets into clusters.
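Clustering can be done in many ways; a classic and simple technique (not one this article or chapter specifically prescribes) is k-means. The sketch below assumes each pet photo has already been reduced to a small numeric feature vector, here made-up 2D points, and groups the points into k clusters by repeatedly assigning each point to its nearest centre and moving each centre to the mean of its points.

```python
import math
import random

def kmeans(points, k, iterations=20, seed=0):
    rng = random.Random(seed)
    # Start with k distinct data points as the initial cluster centres
    centres = rng.sample(points, k)
    for _ in range(iterations):
        # Assignment step: attach every point to its nearest centre
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centres[i]))
            clusters[nearest].append(p)
        # Update step: move each centre to the mean of its assigned points
        for i, cluster in enumerate(clusters):
            if cluster:  # keep the old centre if a cluster ended up empty
                centres[i] = tuple(sum(coord) / len(cluster) for coord in zip(*cluster))
    return centres, clusters

# Hypothetical feature vectors: two visibly separate groups of "pets", no labels given
points = [(1.0, 1.2), (0.8, 1.0), (1.1, 0.9), (5.0, 5.2), (5.3, 4.9), (4.8, 5.1)]
centres, clusters = kmeans(points, k=2)
for centre, cluster in zip(centres, clusters):
    print(centre, cluster)
```

Note that k, the number of clusters, has to be chosen up front here; k-means only decides which points belong together, not what the groups mean.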

Data Generation/Synthesis

Given a set of data, we can also aim to synthesize new data that is similar to it. For example, if we have a large set of dog photos, we can aim to generate a photo of a non-existent dog.
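Deep learning approaches to data generation are far more elaborate, but the core idea of fitting a model to the data and then sampling new, previously unseen examples from it can be sketched with a very simple generative model. The sketch below assumes each example is a short vector of numbers (hypothetical dog measurements: height and weight, not photos) and models each feature as an independent normal distribution.

```python
import random
import statistics

# Hypothetical training data: (height in cm, weight in kg) of a few dogs
dogs = [(55.0, 25.0), (60.0, 30.0), (50.0, 22.0), (58.0, 28.0), (62.0, 32.0)]

def fit(data):
    # "Training": estimate a mean and standard deviation for every feature
    columns = list(zip(*data))
    return [(statistics.mean(col), statistics.stdev(col)) for col in columns]

def generate(params, n, seed=0):
    # "Generation": sample new examples that resemble, but do not copy, the data
    rng = random.Random(seed)
    return [tuple(rng.gauss(mu, sigma) for mu, sigma in params) for _ in range(n)]

params = fit(dogs)
for height, weight in generate(params, 3):
    print(f"new dog: {height:.1f} cm, {weight:.1f} kg")
```

Treating height and weight as independent is a deliberate simplification: realistic generative models also capture how features relate to each other (taller dogs tend to be heavier), which this sketch ignores.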

Reinforcement Learning

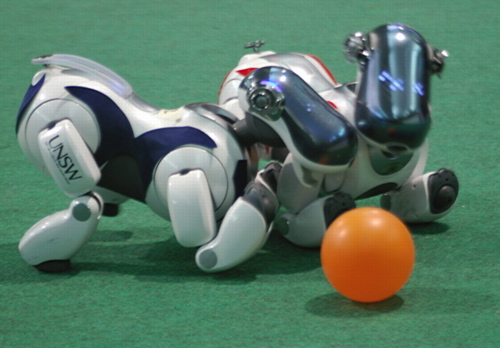

The final group of machine learning techniques that we go over in this article are those where the learning process interacts with an environment. In reinforcement learning, the main goal is to figure out what action needs to be taken in a particular situation. As an example, take a scenario where you want to teach a robot dog to play football.

The process of reinforcement learning is as follows. The agent interacts with the environment through actions and observes how the environment reacts to those actions. Depending on the environment, the agent may receive rewards, either positive or negative. The rewards, together with the observations, guide the selection of subsequent actions. This forms a cycle: actions lead to observations and potentially rewards, which in turn lead to new actions. The cycle can be repeated indefinitely or until some goal is reached.

To make this description a bit more concrete, let's return to our example of a robot dog learning football. Here the agent is the decision-making process of the robot dog. The environment is a playing field with other robots and a ball. The observations are the sensor data from this environment, such as the robot's vision. Rewards could be given for taking control of the ball, making a successful pass and, of course, scoring. Negative rewards can be given for missing a pass, walking off the field and other undesirable actions.

Football played by four-legged robots, such as the AIBO robot dogs, is one of the leagues of the RoboCup competition, which aims to promote robotics and AI research. This photo depicts a four-legged league game from RoboCup 2006 in Bremen, Germany. Public Domain Photo by Brad Hall

Reinforcement learning as a whole can be pretty complex, with many variables depending on what can be known about the environment, its state or the actions. Subcategories of reinforcement learning are generally based on the restrictions placed on these variables.
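The cycle of actions, observations and rewards described above can be sketched in a few lines of Python. Both the environment and the learning rule are assumptions made for illustration: the environment is a hypothetical five-cell corridor with a goal at one end (far simpler than a football field), and the agent learns with tabular Q-learning and epsilon-greedy exploration, which is one classic reinforcement learning technique among many.

```python
import random

class Corridor:
    """Hypothetical environment: cells 0..4, the agent starts at 0, the goal is cell 4."""
    ACTIONS = (-1, +1)  # step left or step right

    def reset(self):
        self.position = 0
        return self.position  # the observation is simply the current cell

    def step(self, action):
        self.position = min(max(self.position + action, 0), 4)
        done = self.position == 4
        reward = 1.0 if done else -0.01  # small penalty per step, reward at the goal
        return self.position, reward, done

def q_learning(env, episodes=200, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    # q[state][action index]: the agent's estimate of future reward
    q = {s: [0.0, 0.0] for s in range(5)}
    for _ in range(episodes):
        state, done = env.reset(), False
        while not done:
            # Act: mostly pick the best-known action, sometimes explore at random
            if rng.random() < epsilon:
                a = rng.randrange(2)
            else:
                a = max(range(2), key=lambda i: q[state][i])
            # Observe the environment's reaction and the reward
            next_state, reward, done = env.step(env.ACTIONS[a])
            # Learn: nudge the estimate toward reward + discounted future value
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q

q = q_learning(Corridor())
policy = ["right" if q[s][1] > q[s][0] else "left" for s in range(4)]
print(policy)  # prints: ['right', 'right', 'right', 'right']
```

The small negative reward per step is what makes the agent prefer the shortest route to the goal; a real robot dog would face a vastly larger space of observations and actions, which is where deep learning comes in to approximate such value estimates.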

Towards Deep Learning

The above is a general introduction to machine learning, which raises the question of how deep learning fits into the picture. Machine learning as a field has quite a bit of history, with many of its techniques laid out in the previous century. A number of these techniques, such as Neural Networks, initially showed great promise, but research and applications languished due to the lack of data and of the large amounts of processing power needed to make them work. In recent years this has changed, and together with some important advances, this has led to deep learning techniques becoming one of the most effective approaches for various machine learning applications.

In addition, the availability of deep learning frameworks, such as MXNet, which we will use in our learning process, makes the field very inviting for new users and applications.

Conclusion

This was a quick rundown of the first chapter. For the interested reader, the book goes into much more detail, with some previews of techniques and extensive references. If you have any remarks or suggestions for this series of articles, please let me know! I am quite eager for the next part, in which I hope to plunge deep into some coding with MXNet.